AI tools are becoming part of the team, not just as software but as contributors with real output and real impact. If we want them to deliver value, it’s time to start managing them more like humans: with onboarding, feedback, performance checks, and the occasional tough conversation.

Remember when you used to have a Personnel Department? I know some of you are giving me a blank look, but a few of you nodded. A handful of you even remember post-war ‘Manpower Management’ teams: the people who became your Personnel teams.

Back then (for UK readers, I’m talking about the world as it was before Channel 4 existed) management was all about process: recruitment and payroll. But eventually everyone rebranded their Personnel teams as Human Resources — because it finally occurred to us (starting in the 70s then spreading inexorably around the corporate globe) that people aren’t just worker drones: we are humans. Assets to be managed and nurtured, alongside capital and equipment, sure, but: different.

Now that we’re in a new AI-powered industrial revolution, the workplace is shifting again. Your team probably still includes plenty of humans (although, if you’re in a warehouse or factory, they might already be outnumbered by robots). Either way, you probably also have algorithms, agents, and automated workflows, and you’re adding more. Where your AI works best, it’s part of the same organisational machinery. That means it’s contributing output, needs oversight, and — if we’re honest — sometimes needs performance management. Maybe your AI agents aren’t leading your teams yet, but they are already working as your leaders’ beloved assistants.

One of our favourite customers has convinced me it might be time for a new organisational function to help us keep pace with the new reality. We’ll let you keep calling this function HR, but it might need to stand for Hybrid Resources: an enabling team that helps us to get this right for the future. You could also go with TR: Total Resources. Or Humanish Resources? Anyway: let’s talk about HR 2.0.

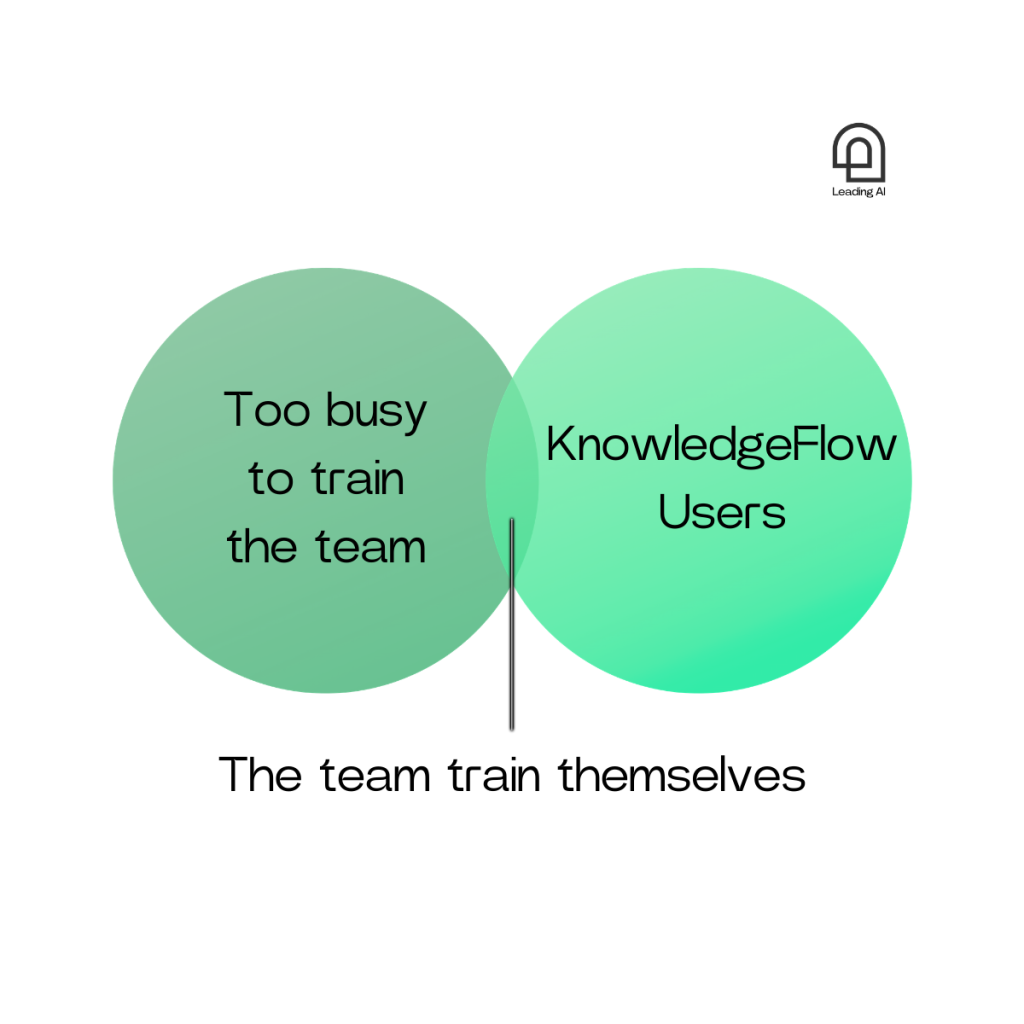

Whether you call your generative AI tools colleagues, co-pilots, or code, the truth is that this is more than just deploying a new app. A lot of your AI needs the same good management as people do: right-sized roles, good onboarding, regular performance reviews, and a few checks and balances to manage its impact on everyone around it, on your bottom line and on the planet.

What good management means: lessons from HR 1.0

The best managers know staff succeed when they have:

1. Clear role definitions – sometimes this is about clarifying tasks or procedures, but mainly it’s about knowing what you’re accountable for and how success is measured.

2. A good onboarding process – people work best when you give them the tools, context, and contacts they need to deliver quickly.

3. Regular check-ins – performance issues should be caught early, feedback should be timely, and successes need to be recognised.

4. Professional development – everyone needs the opportunity to learn new information and skills, and adapt to change.

5. Workload balance – enough stretch to stay engaged, not so much they break.

6. Alignment with organisational values – you want to nurture the right behaviours and attitudes, and you want to motivate people to stay on task by helping them see how their work supports the bigger picture.

Our big, obvious insight is simply this: these principles map almost perfectly to AI. Neglect to apply them to your artificial assistants, and you’ll get patchy results and wasted investment.

Meet the team

Some specialist AI assistants are already embedded in your team, playing the part of your most imaginative marketing intern: full of ideas, quick to create and great at breaking the blank-page panic. Tools like ChatGPT, Jasper AI, Canva, or Runway ML can spin up campaign concepts, copy, and visuals in minutes. The trick is giving them a clear brief and guardrails, because they lack true originality and a firm grasp of context, and their shiny brilliance can easily drift off-brief. You also need to teach them about IP and the rules of engagement when they produce work for your brand.

A lot of your other assistants are more like the all-rounder management consultant or classic grad scheme generalist — smart, adaptable, and happy to turn their hand to most tasks. ChatGPT Enterprise, Claude 3.5, Perplexity, and Gemini Advanced excel in this space, for the most part.

If you are buying this analogy at all then you also know that form should follow function: whether it’s people or AI, you need to bring in the resource you need for the job you need doing. Onboard it well, then develop and adapt it as required. It’s not all on your tech team to just install something.

(And don’t kid yourself that you can run an organisation on generalists alone, however smart they are. Take it from me: our skills are impressive and broad but sometimes shallow as. When something needs an expert, we’ll call one… if you’re lucky. If you’re unlucky, your generalist will just have a go anyway and produce something sub-par – or, worst case, pass off someone else’s smarter contribution as their own. This happens in AI too. Just ask anyone who’s tried asking Copilot for some code or ChatGPT for a flowchart and seen some pretty ugly results.)

What comes next

The elephant in the room is this: insisting that you should ‘always’ keep a human in the loop is as mad as trying to micromanage a sentient human team. You become the bottleneck that slows everything down. You miss the productivity bonus of empowering everyone to work effectively, at pace, everywhere, all at once – and you miss their original insights and innovations each time you make decisions. And, frankly, micromanagers are always ultimately delusional: your team are definitely doing and saying things you don’t know about, and they’ll keep more hidden from view if there’s a hefty penalty (time, effort, stress) each time they involve you. You have to trust people and assume competence almost all of the time.

Likewise, you are quickly going to reach a point where you cannot supervise every AI-powered process. In lots of ways, we’ve already passed that point. According to the experts at Gartner, AI supervisors will – increasingly – come into play: models tasked with monitoring other AI for things like drift, bias, performance, and energy use.

Every generative AI system you already use does better when you challenge it to dig deeper, ask it to check its own output, or tell it to find more sources. AI is very capable of supervising and adding value to another AI when you tell it that’s the job it has to do. AI supervisors are inevitable: over time, you might need fewer team members and team leaders.

It’s okay. Breathe. You already take your hand off the wheel each time you let an algorithm find you some information, and you will keep taking little steps back as AI tools get better and faster. Our advice is: step back consciously, and apply the handbrake before you reach the edge of the cliff. Let people and AI do their thing, with your support, but set the standards, engage your critical brain, and know where your red lines are to maintain accountability where it matters.