I want to nominate something for “fastest-growing AI risk”, in the hope we can work together to manage it: complacency.

The AI early adopters — folks who have embedded generative AI use into their daily work routines (me and, I’m guessing, you) — tend to think we’ve got a pretty good grasp of the basics: when to use AI, how to prompt it. Unfortunately, the signs suggest we’ve still got further to go than we think.

I have two key pieces of evidence. First, we haven’t unlocked enough of the enormous productivity gains available at an organisational level. In fact, in some cases, we’re creating new burdens instead. Second, “What time is it?” was a trending prompt on Perplexity last Sunday morning, which is every kind of wrong.

Now, I know it wasn’t any of you. I’m not even angry; I’m just disappointed. But at a bare minimum, I think we can all agree it means we are, in general, not always using the right AI tools for the right job, in the right way.

So, harnessing my annoyance for practical use, here are three golden rules for AI that bear repeating.

Rule 1: Right-size your AI use, for the sake of the planet and your brain

We can all enjoy the insanity of asking Perplexity the time while it’s displayed in the corner of the screen you’re already staring at, but it reveals a lot about how we’re using these tools. AI is powerful, but it isn’t magic, and it isn’t trivial.

Generative AI, particularly large models, has distinctly non-trivial environmental impacts. Training and inference consume a lot of electricity, generate e-waste, require water for cooling, and rely on resource-intensive infrastructure. MIT News and the UNEP spell this out starkly.

On the human side, too much ‘cognitive offloading’ is bad for your brain: leaning on AI for low-friction tasks can dull curiosity and erode memory. In other words, it does you good to work things out for yourself sometimes.

So: use clocks, calculators and dictionaries for the little jobs they’re perfect for. Save generative AI for when you need messy information synthesised, creative leaps, or help with content quality. Even embedding the question “Is this prompt worth the AI energy cost?” as a mental checkpoint would make a difference. If enough people paused to ask it, the planet might thank us.

Rule 2: AI at work still needs real change management

Here’s what happens when you drop a “just use this AI tool” instruction into workflows without structure: you get AI slop, duplication, confusion, and extra layers of checking.

A recent Harvard Business Review piece set out how “workslop” is seeping into offices, adding hours of cleanup rather than saving hours of drudgery. Many AI pilots fail to yield efficiency gains at all: one review found that 95% delivered no productivity improvement. Famous AI blunders (biased dashboards, hallucinated policies, bots giving bad legal advice) show what happens without guardrails. Things go sideways fast, and humans are left managing the fallout. The glow of the initial time saved quickly fades.

Literally everything you ever learned about changing practice and culture applies to AI adoption. Set clear objectives. Choose your use cases carefully. Train people properly. Build feedback loops. Keep humans in the loop where accountability matters. If you don’t, you’ll compound the cost rather than reduce it.

Rule 3: Always remember what your AI is for — and use the right one

Every AI tool has its sweet spot. The mistake people make is lazily lumping them all together and then wondering why the output is disappointing.

Perplexity is great for finding information. ChatGPT and Claude are consistently strong at generating and adapting language, and at coding. Gamma will help you turn notes into a slide deck. Napkin will give you a cleaner, simpler infographic than any of those tools I’ve just listed.

The trick is remembering your role. You’re the one who sense-checks the output and decides what’s fit for purpose. That’s the real job: knowing what the right content is, and only sharing that. Yes, “content generator” may now be a career option your children are seriously considering, but even that job shouldn’t end the moment you have something to share online. Your value lies in making professional judgements and good choices. AI can only be as smart as the humans directing it.

Lessons from lazy Sunday mornings

While I was busily compiling a mental note of the 74 ways in which asking Perplexity the time might demonstrate how rapidly the world is descending to hell in a handcart, my friend messaged to tell me she was having better luck with the same tech. In what may be the ultimate first world problem, she had turned to Perplexity when the steam nozzle on her coffee machine was playing up, rendering her milk unfrothed and disappointingly tepid. Turns out it just needed descaling; the AI quickly helped her save time and money by getting straight to the diagnosis and solution.

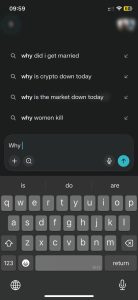

In the process, a few more use cases popped up, when my friend first started to enquire about her coffee woes:

Her screen grab provides quite an insight into the questions that play on people’s minds of a Sunday morning. I find it hugely reassuring on two levels. First, it means there are couples lying in bed of a Sunday morning with some real issues while I am living a relatively carefree life in comparison, for which I am grateful. And my friend is a therapist, so this bodes well for ongoing demand for her services. Second, lots of people have indeed learned that the right AI is really good at getting evidence-led answers to some pretty complex queries. It’s actually much better at those than it is at telling the time. So… progress!