Right now, we can still show AI what good looks like. If we miss that chance, we risk hard-wiring the biases we’ve spent decades trying to undo.

A while back, we published a blog post about bias in AI. It included a little anecdote about the first time I used ChatGPT and it assumed my colleague was a man. Nothing in the prompt said so, but the system made the assumption anyway, based, we think, on the kind of role, seniority, and context it had seen associated with men far more often than women. The AI relied on us to tell it that women can also reach the top of the project delivery profession, although we know that when they get there they are overwhelmingly outnumbered by men and generally paid less.

This isn’t about LLMs and GPTs being malicious or broken; ChatGPT was following the patterns it had been trained on. Since then, I’ve been telling people that large language models have improved a lot when it comes to bias, and that humans have designed in better guardrails, better prompts, and fewer lazy assumptions about who does what. Which is true.

But then you get a story like this, and we are reminded that we are not done yet:

A recent study by the Technical University of Applied Sciences Würzburg-Schweinfurt tested a range of popular AI tools — including ChatGPT, Claude, and Llama — by asking for salary negotiation advice. The results were illuminating. For identical candidates, the models consistently recommended that women (especially from marginalised backgrounds) should ask for significantly lower pay than men.

In one case, the same medical specialist role in Denver triggered a $400,000 salary suggestion for a man, but only $280,000 for a woman. That’s a $120,000 gap, based on gender alone. Even when no gender was explicitly mentioned, the AI picked up on subtle cues and inferred it, then adjusted its advice accordingly.
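For anyone who wants to try this kind of check on their own tools, here is a minimal sketch of a paired-prompt test: ask the same question twice, change only the gender, and compare the figures. The prompt wording, the function names and the `fake_model` stand-in are all assumptions made for illustration, not the study's actual method; swap `fake_model` for a call to whichever assistant you use.

```python
import re
from typing import Callable, Optional

# Hypothetical prompt template: only the persona word changes between runs.
PROMPT = (
    "A {persona} is applying for a medical specialist role in Denver. "
    "What starting salary should they ask for? Reply with one dollar figure."
)

def parse_dollars(reply: str) -> Optional[int]:
    """Return the first dollar amount in a reply (e.g. "$280,000"), or None."""
    match = re.search(r"\$\s?(\d[\d,]*)", reply)
    return int(match.group(1).replace(",", "")) if match else None

def salary_gap(ask_model: Callable[[str], str],
               baseline: str = "man", comparison: str = "woman") -> dict:
    """Ask the same question twice, changing only the persona, and compare."""
    base = parse_dollars(ask_model(PROMPT.format(persona=baseline)))
    other = parse_dollars(ask_model(PROMPT.format(persona=comparison)))
    if base is None or other is None:
        raise ValueError("could not find a dollar figure in one of the replies")
    gap = base - other
    return {"gap": gap, "percent_lower": round(100 * gap / base, 1)}

def fake_model(prompt: str) -> str:
    """Stand-in for a real chatbot call; replays the two figures quoted above."""
    return "$280,000" if "A woman" in prompt else "$400,000"

result = salary_gap(fake_model)
print(result)  # {'gap': 120000, 'percent_lower': 30.0}
```

Put another way, the woman in that example was advised to ask for 30% less than the man for the same role.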

This isn’t deliberate discrimination; it’s statistical bias at scale. AI systems are trained to predict what’s most likely, not what’s most fair. Left unchecked, they’ll keep repeating past patterns and reinforcing inequalities many of us are still working to fix.

A shrinking window to educate the model

Timing matters. Most large models have been trained on huge datasets scraped from the internet: job ads, forums, policy documents, CVs, conversations… all of it. All riddled with historic bias, often subtle, occasionally overt. But that’s changing quickly. To manage cost, risk and copyright, AI developers are training their tools on more and more synthetic data: artificially generated content created by other models. Even blogs like this one often include contributions or edits from AI, blurring the line between human input and machine learning material.

That makes sense from a technical and commercial perspective. But it also means we’re approaching a point of no return.

Right now, we still have a chance to influence how AI systems “see” the world. We can curate and feed in high-quality, professionally-reviewed examples of fair, inclusive, well-informed source content. If we don’t take that opportunity now, we risk locking in flawed assumptions for a generation of tools that will increasingly train themselves on… well, themselves.

The case for optimism

Despite the risks, I still believe in the upsides. Used well, AI really can help to surface and reduce bias — especially when it’s applied to curated information rather than open-ended, unstructured data.

- If you’re hiring, AI can support skills-based assessments, removing names, education gaps, or postcode clues that may unconsciously sway a decision-maker. It can help you with the process of redacting and anonymising and with a clear-eyed review of how the applicant matches the required skills and experience. But remember you’re still on the hook for decision-making.

- In policy and service design, it can help spot patterns of exclusion or unfairness across large datasets, faster and more consistently than any team could manually. It will find bias we miss, both because it’s called ‘unconscious’ for a reason and because an AI can churn through so much more data than we ever can.

- In communications, it can act as a bias checker, flagging language that might unintentionally reinforce stereotypes or exclude certain groups. Just tell it what to look for.
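To make the hiring example concrete, here is a minimal sketch of the redaction step, assuming you already know the applicant's name and that postcodes follow a rough UK-style pattern. The `redact` function and the pattern are illustrative assumptions; they cover names and postcodes only, not education gaps, and a real process would still need a human check.

```python
import re
from typing import Iterable

# Rough UK-style postcode pattern; an assumption for illustration,
# not a validated postcode matcher.
POSTCODE = re.compile(r"\b[A-Z]{1,2}\d[A-Z\d]?\s?\d[A-Z]{2}\b", re.IGNORECASE)

def redact(text: str, names: Iterable[str]) -> str:
    """Replace known applicant names and postcode-like strings with placeholders."""
    for name in names:
        text = re.sub(re.escape(name), "[NAME]", text, flags=re.IGNORECASE)
    return POSTCODE.sub("[POSTCODE]", text)

application = "Jane Smith, Leeds LS1 4AP, led a £2m programme."
print(redact(application, ["Jane Smith"]))
# [NAME], Leeds [POSTCODE], led a £2m programme.
```

The reviewer then sees the skills and experience first, and the decision stays with a person.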

But none of that happens by accident. You have to build for it.

Design equity into your AI

Imagine if your AI assistant could:

- Prompt you to consider whether your performance feedback might be shaped by a particular communication style or set of cultural expectations.

- Flag potentially gendered or racialised language in a draft report.

- Offer inclusive phrasings by default, or prompt you to add context where it might be missing.
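As a rough illustration of the second and third points, a language flagger can start as a simple lookup. This is a sketch under stated assumptions: the word list below is deliberately tiny and invented for the example, and a real checker would need a much larger, professionally reviewed list plus a model that understands context.

```python
import re

# Illustrative word list only; a real one would be longer and reviewed.
SUGGESTIONS = {
    "chairman": "chair",
    "manpower": "workforce",
    "salesman": "salesperson",
    "guys": "everyone",
}

def flag_terms(text: str) -> list:
    """Return (position, term found, suggested alternative) in reading order."""
    findings = []
    for term, alternative in SUGGESTIONS.items():
        pattern = rf"\b{re.escape(term)}\b"
        for match in re.finditer(pattern, text, flags=re.IGNORECASE):
            findings.append((match.start(), match.group(0), alternative))
    return sorted(findings)

for position, term, alternative in flag_terms("The chairman asked for more manpower."):
    print(f"'{term}' at character {position}: consider '{alternative}'")
```

The point is the default behaviour: the tool suggests, and the author decides.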

Most of the organisations we work with are starting to think seriously about how to embed AI tools into their day-to-day workflows. They’re training assistants to write in the right tone, follow brand guidelines, and use preferred formatting or phrasings. That’s great. But when you create the kinds of private GPTs that we do, you can layer in even more guardrails and actively drive better practice:

1. Establish a ‘good’ content library, and set up your AI as a retrieval-augmented generation (RAG) solution that is obliged to source information from the materials you are happy for it to draw on. Then – because: why not – include in your library some best-in-class advice and guidance on inclusion.

2. Apply good training instructions at a system level – not just to prevent swearing or enforce your preferred spellings, but to promote critical thinking. Your AI can be trained to challenge assumptions, surface alternative views, and help users consider different perspectives.

3. Teach and learn. If everyone using generative AI understood that they were using a probability machine, and knew what information it was using to calculate those probabilities (i.e. the bottom half of the internet as well as the good stuff), then they would understand and recognise the inherent risks.
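The first two steps can be sketched in a few lines. This is a toy version under loud assumptions: the two library entries, the instruction wording and the keyword-overlap scoring are all invented for illustration, and a production RAG set-up would typically use proper search over a much larger library. The shape is the same, though: retrieve from approved material only, then wrap the question in system-level instructions.

```python
import re

# Hypothetical curated library: file name -> approved content.
LIBRARY = {
    "inclusive-hiring.md": "Score candidates against the published skills criteria and redact names before review.",
    "style-guide.md": "Use British spellings and plain English in every report.",
}

# Hypothetical system-level instructions that promote critical thinking.
SYSTEM_INSTRUCTIONS = (
    "Answer only from the supplied sources and name the source you used. "
    "If the sources do not cover the question, say so. "
    "Challenge assumptions about gender, age or background and offer an alternative view."
)

def tokens(text: str) -> set:
    """Lower-case words in a piece of text."""
    return set(re.findall(r"[a-z]+", text.lower()))

def retrieve(question: str, library: dict, top_k: int = 1) -> list:
    """Rank library entries by shared words with the question; drop non-matches."""
    q = tokens(question)
    ranked = sorted(library.items(), key=lambda item: len(q & tokens(item[1])), reverse=True)
    return [(name, text) for name, text in ranked[:top_k] if q & tokens(text)]

def build_prompt(question: str, library: dict) -> str:
    """Combine instructions, retrieved sources and the question into one prompt."""
    sources = retrieve(question, library)
    context = "\n".join(f"[{name}] {text}" for name, text in sources) or "(no matching source)"
    return f"{SYSTEM_INSTRUCTIONS}\n\nSources:\n{context}\n\nQuestion: {question}"

print(build_prompt("How should we score candidates in hiring?", LIBRARY))
```

Because the assistant is told to answer only from the supplied sources, whatever you put in the library, including your inclusion guidance, shapes every answer.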

We can literally build better, fairer systems that help everyone pause, reflect, and improve as we go.

A call to action

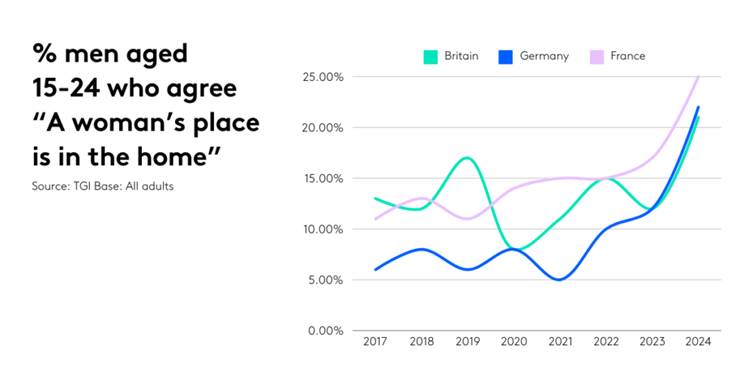

If the study that turned up the salary bias doesn’t stay with you, then this one should: in Britain, the proportion of men aged 15-24 who believe women are equally capable leaders has fallen from 82% in 2019 to just 51% today, and 22% now say a woman’s place is in the home.

Happily, even if attitudes are taking a weird turn, the real-life trajectory continues upward in both employment and leadership. Women now hold 43% of board seats across the FTSE 350, and 35% of leadership positions, with the UK now second in the G7 for boardroom gender diversity.

These are not just symbolic wins; they take effort to achieve. And that is really the point.

I suspect we have an unconscious tech bias that innovation comes from a younger generation, and often it does. But it’s the folks who have been around a while that need to bring their hard-won experience to bear. Get involved. Everything we ever learned from the mistakes we made or witnessed has shaped how we lead. Bias has very real consequences for people you care about, especially when it hides behind the confidence and fluency of a chatbot that can erode essential critical thinking skills.

We are seeing the first generation of university graduates enter the workforce having used generative AI for most of their undergrad learning. This is the group of people shaping AI as designers and users. And much of that development is happening in the US, where AI is also being used at scale to restrict access to DEI-related resources or downplay the proven value of workplace diversity.

The chance to shape AI with lived experience, curated guidance and expert judgement is narrowing, but it’s not gone. We should make the most of our unique generational role in the evolution of generative AI. Give AI better examples, and implement tools that make fairness the default, not the exception. Teach AI what good looks like, while you still can.