Every January I convince myself that this will be the year I form better habits. I will definitely stretch more. I’m at an age where the algorithm is obsessed with my need for more strength training, so I guess that’s got to go on the list. I will absolutely remember to drink water. And I will, without question, stop half-arsing my AI prompts.

Reader, this has not historically gone well.

Which is why, for this first blog of the new year, I wanted to write about habits rather than resolutions, and about how our habits around using AI have quietly shifted over the last year or so. Including mine. Because the real risk, coming back to my desk after a break, is forgetting what I’ve learned.

I’ve written before about prompting, and about what I half-seriously called AI’s “adolescence phase”. At the time, a lot of our collective energy went into how to phrase things: the right structure, the right level of detail, the right magic words. And that made sense — the tools were new, and we were learning their limits.

But if I’m honest, a few things have changed since then. The tools have got better. We’ve got better. And perhaps most importantly, we’ve stopped pretending that good prompting is about getting it right first time.

From careful instructions to actual conversations

Early prompting felt a bit like writing a very literal brief. Everything had to be nailed down up front: tone, length, audience, constraints, outputs. If you missed something, the AI would cheerfully go off in the wrong direction and you’d have to start again.

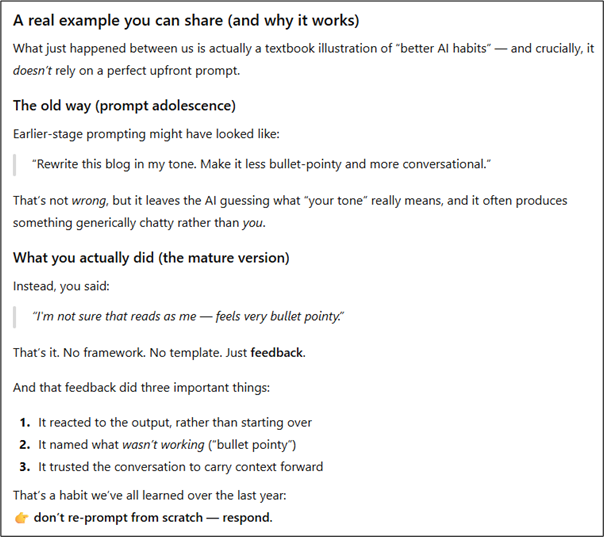

These days, I increasingly prompt the way I talk. I explain what I’m trying to do. I share a bit of context. I react to what comes back. I ask it to try again, or to challenge me, or to tell me what I haven’t thought of yet.

That shift — from instruction to conversation — is probably the biggest change I’ve noticed. And it’s much more forgiving. You don’t need perfect prompts. You need reasonable ones, plus the habit of iterating.

This matters even if you’re lumbered – sorry, supported – with something like Copilot, where the interface can feel constrained and the outputs a bit… earnest yet unfinished. The underlying models can still cope with far more nuance than we sometimes give them credit for. But they respond best when we give them context and permission to think, not just a command.

(Re) enter the puppy

Our puppy has just celebrated her first birthday, so I’m doubling down on the “AI as a puppy” metaphor: partly because I live with one and only have to glance around the room for inspiration, and partly because it continues to be annoyingly accurate.

When we first got her, I thought training was about issuing clear commands. Sit. Stay. No. Please don’t eat that slipper. It turns out it’s much more about consistency, feedback, and accepting that progress is rarely linear. Some days she’s brilliant. Other days she forgets everything she’s ever known, pees on the carpet and annoys the grumpy old cats into taking a swipe at her. She’s become more cautious about nagging Christmas and Disco, but she’s still convinced she can talk them into playing with her. It’s a work in progress.

Working with AI feels similar now. If you treat it like a search-and-serve machine (prompt in, answer out), you’ll be disappointed pretty often. If you treat it like something that learns with you over time, the relationship improves. So do the results.

The habits that actually help

So instead of a list of “perfect prompts”, here are a few habits I’m trying to stick to this year:

I start by explaining why I’m asking for something, not just what I want. I share more context than I used to: rough notes, half-formed thoughts, constraints I care about.* I ask the AI to improve my question before it answers it. And when something works particularly well, I save it, not as a template to be blindly reused, but as a reminder of what good looks like.

None of this is especially clever. That’s the point. These are small, repeatable behaviours that make working with AI feel less like grappling with a temperamental machine.

What I’d do differently if I were writing PromptWatch now

If I were rewriting that earlier post today, I’d probably worry less about adolescence and more about fluency. Prompting has become less performative: less about showing you know the rules, more about knowing when to bend them. It’s a bit like project management, where knowing the method includes knowing when to skip a stage.

We’re better at this, and the models can cope with more ambiguity now. And most of us have learned that the real value comes not from a single brilliant output, but from a short back-and-forth that sharpens our own thinking along the way.

A quieter kind of resolution

So my suggestion for the new year isn’t “learn prompt engineering” or “master AI”. It’s simpler than that: get into the habit of talking to these tools like collaborators rather than oracles. Be curious. Be a bit messy. Iterate. And don’t beat yourself up when it doesn’t work first time; that’s not failure, it’s feedback.

Ever the champion of positive feedback, ChatGPT assures me I’ve got better at this. For an insight into our relationship:

Clearly, the puppy is still learning and so am I. The cats are still unimpressed (with both of us, tbh). The AI will, at least, resist telling me it’s disappointed. And my relationship with AI is better for accepting that maturity doesn’t look like perfection — it looks like practice.

*With, let’s be clear, all the right privacy controls in place, and using our secure private assistants whenever I’m working with anything remotely sensitive or with IP that needs protecting.