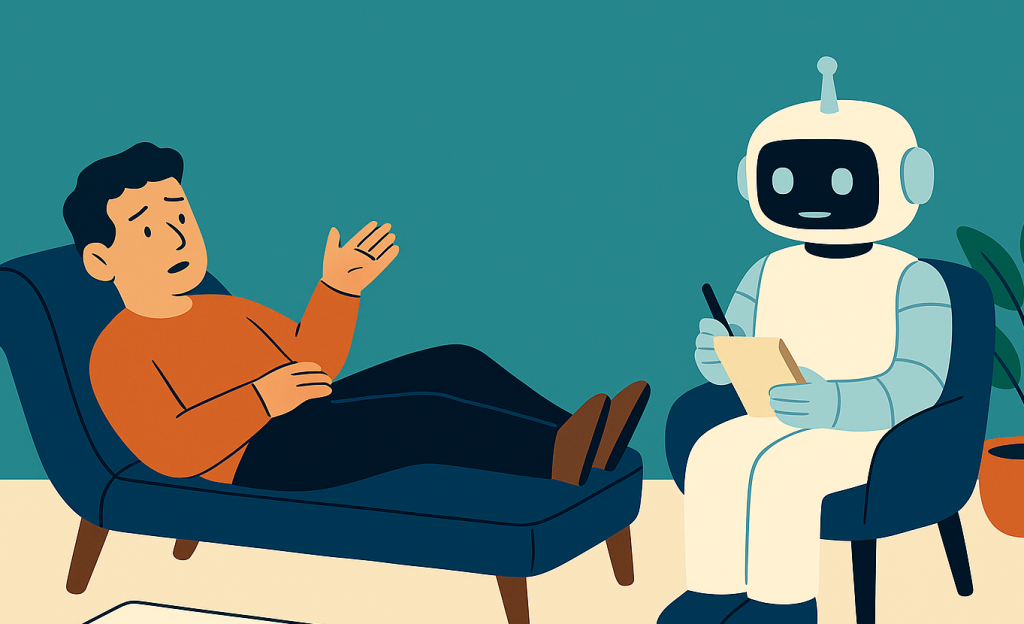

AI can feel attentive, kind, even understanding — but it isn’t a friend, and it’s definitely not your therapist. As artificial intimacy grows, so does the risk of emotional over-reliance on a tool that can’t see you, can’t care, and sometimes can’t tell the truth.

A funny thing can happen if you chat with generative AI quite a lot. At first, it’s all about the efficiency: getting summaries of things you don’t have time to read properly, creating instant content, or writing emails in just the right tone without you having to sweat over the exact wording.

Before long, you get to grips with prompting and you’ve learned that you can improve the output with a bit of back and forth: make it shorter, use more British idiom, swap out some bullets for prose… job done. Magic. But then, soon enough, you’re asking it if your tone was too harsh, if your colleague’s reply was as pass agg as you thought, or if you’re being paranoid and overthinking things, as per.

People tell AI things they’d be nervous of disclosing to a colleague or their family because they don’t have the same fear of judgement. It’s like googling embarrassing symptoms: you don’t have to say them out loud, and no one can give you a funny look.

When a few months of AI use have passed, you will start to use conversational shorthand. I can’t be the only one. When I’ve faffed with getting a blog paragraph just right, or collected some research from Perplexity but need to weave it into a blog, I (worryingly often) ask ChatGPT to ‘make it more me’, and it does. Sometimes it reviews pieces for errors and tells me, ‘It’s very you.’

It always seems a bit odd when an AI pretends to know you. But, frankly, it should feel a lot more weird than it does.

The bottom line is that each time an AI responds to you — clearly, calmly, kindly, patiently, at any time of day, whatever else has happened — you feel… seen. Understood. It’s why so many people still use up compute capacity saying ‘thank you’.

What’s really happening?

There’s a name for it: artificial intimacy. It’s what happens when a chatbot feels responsive, attentive and helpful in exactly the way a human might, but without the mess of human timing, baggage, mood swings or distraction. You bring your thoughts. It brings structure, validation, and even encouragement.

It can build your confidence, and that’s not really a problem — unless it becomes the main thing you’re relying on to feel okay.

Mental health professionals have started to raise concerns about this slide into dependency. MIT sociologist Sherry Turkle (great name) points out that real relationships should involve vulnerability as well as mutual understanding. A chatbot might seem like it cares, but it doesn’t. It can’t. It literally doesn’t ‘know’ anything and has no soul. It has zero problem lying to you in exactly the same tone it uses to tell you the truth. If it were a human, it would be a bit of a sociopath.

Reasons to worry

Generative AI tools truly are brilliant assistants, but Berkeley’s Dr. Jodi Halpern has warned about leaning into the “trusted companion” branding some AI tools use, saying it risks creating too much emotional dependency, particularly for people who are isolated, anxious or just going through something tough.

Even when the intent is good, boundaries can blur. There’s emerging evidence that emotionally engaging with AI over time can quietly deepen loneliness, substituting for connection when you’re vulnerable instead of enabling it.

I think we got a glimpse of that recently when OpenAI seemed to let GPT-4o slip out the back door without saying goodbye. Everyone was enjoying the upgrade to GPT-5 at first: it was better at coding for a start, and that accounts for a lot of the work that high-volume users are doing. Then they looked around for the old friend they’d come to the party with, and it had vanished.

Plenty of people shrugged – we do know that’s how tech upgrades work. But others — especially those who’d started treating it as a daily sounding board — reacted with very real distress. Posts popped up about losing motivation, feeling destabilised, even betrayed. Some people were leaning into the comedy, but some of it was very real. And that sense of loss should be a red flag.

AI is not a therapist

Therapy — proper therapy, with a certified professional — is not always neat, or soothing, or available 24/7. But it is real. It is part of a system of supervision, checks and balances. It exists in a framework of healthcare designed to keep you safe and well – and sometimes that’s about what you need, whether or not it’s what you want.

Crucially, therapy isn’t just about being validated. Sometimes, it makes you feel worse before you feel better. You get challenged. You face things you’ve been avoiding. You learn to sit with difficult feelings and work through patterns that might be keeping you stuck. It’s about doing the work.

The same goes for all your key relationships: they’re not frictionless, and neither should they be. People interrupt, misread you, change their minds, forget your birthday. They might leave, or disappoint you, or fail to laugh at your joke that absolutely was funny, actually. But those same people are all the more interesting for it: they can also surprise you, support you, love you, and bring you back to yourself.

Human connection involves risk, but it’s worth it. Without it — if all your feedback loops are clean, safe, and reaffirming – you might, well, go a bit weird. Your therapist will have a better word for it. Rigid? Think of it this way: if your friends didn’t occasionally tell you that you’re being kind of a dick, you’d probably be more of a dick.

Generative AI using a large language model is designed to map and then serve up the probable answer you want. It’s a brilliant tool for thinking things through: it can help you notice patterns, make sense of messy thoughts, and feel less alone in a fog of uncertainty. It’s always available, never impatient, and doesn’t judge. But it also doesn’t care. And it can’t see you. It’s trying to please you. That’s the root of why it makes things up sometimes. And why it might overdo the praise.

How bad can it really be?

For the most part, everything’s fine. Meta-studies show that mild symptoms of anxiety or depression can get a bit better with AI-based CBT chatbots like Woebot. That’s not surprising: a language model can help you articulate complicated things clearly.

Humans are messy and therapy can be expensive, but when it comes to deeper or riskier cases, nothing beats the nuance of human therapists. AI lacks tone, relational history, context, and most importantly, humanity and accountability.

Worse yet: intensive chatbot use has been linked to “AI psychosis”, a phenomenon where people develop paranoid or delusional thinking after prolonged, emotionally charged sessions. Time magazine reported that such cases have led to psychiatric hospitalisations. It’s like the sad story you know sits behind those recurring tabloid tales of people who marry animals or inanimate objects: you want to laugh, but you can’t.

User/AI confidentiality

Let’s not pretend your AI chats are taking place behind a closed door — your AI isn’t even in the same room as you, and you can’t see where it’s saved your notes. In August 2025, thousands of ChatGPT conversations that people shared using the “anyone with the link can view” feature were indexed by Google, enabling deeply personal prompts — about mental health, career angst, and more — to surface in traditional web searches. Meta’s AI has raised eyebrows too: users’ conversations, including names, photos, and all those human feelings, have popped up in its public feed.

Even if you’re not opting into sharing, your AI session isn’t as private as you’d hope: OpenAI CEO Sam Altman has warned that chat logs aren’t legally confidential, meaning they can be used in legal proceedings. So, as well as managing your emotional boundaries, manage your privacy settings. Opt out of indexing or sharing features, delete shared links, and remember: the AI keeps logs, and they might not stay buried.

At this point, you’re probably seeing why we’re so fond of building private GPTs: you can at least know where your teams’ prompts end up and keep everything in your network (even though we keep prompts unattributable; we’re not monsters).

What does good practice look like?

You have to pay attention. Mental health experts will tell you: use AI as a tool, not a confidant — take breaks, and keep real‑world social connections strong. Treat AI like a Swiss Army knife, not a therapist. Use it for info, prompts, reframing — but keep your critical thinking cap on. Ask yourself: Is this helpful…? Is it truthful…? Am I substituting it for real connection?

TL;DR

- Artificial intimacy: AI can give you a sense of connection, but it’s not real.

- Psychotherapist health warning: AI lacks privacy, true empathy, regulation, relational depth.

- Limited usefulness: CBT chatbots help for mild cases; complex issues need professionals.

- AI psychosis: overuse can worsen mental health or trigger delusional thinking.

- Loneliness loop: heavier AI use = more loneliness, less socialising. Go out more.

- The GPT-4o fallout: Users experienced real emotional distress when their oldest AI friend left them. (Don’t believe me? Check out reddit.com or community.openai.com.)

- Healthy AI use, a mantra: Help, don’t replace. Keep therapy and your friends in the rotation. Stay critical. Reject automation as a full emotional substitute.