Once upon a time, blockchain was the tech that was set to change everything. It was going to topple traditional banking and end corruption. But aside from a few pioneers, it never quite took over.

Then came generative AI: another revolution, another promise to change everything, and another uneasy relationship with truth and trust. It just had a much faster journey to the mainstream.

When people talk about using blockchain to improve generative AI, it feels to me a bit like watching two people with commitment issues start a podcast together. You wish them well, but you suspect it might not last.

What blockchain actually is (for those of us who nodded politely through the last decade or so)

Unsurprisingly, much of blockchain’s early innovation came from the industries that test-drive every new tech first: gaming, gambling, and yes, the adult entertainment industry (again).

One of our founders is a blockchain enthusiast. He’s explained it to me a few times and sees endless possibilities. Each time, I’m completely bought in, but then the sense of what we can actually do with it never quite sticks in my brain. That’s why I’ve written this: it’s like my GCSE revision all over again. If I do a bit more reading and make a few notes, it’ll fix in my head more permanently. Probably. (And, honestly, that’s the motivation behind a lot of these posts: my inability to retain information unless I force myself to write it down.)

Anyway, for the uninitiated: a blockchain is basically a digital ledger, a live list of records stored across lots of different computers all at once. Instead of one central system (like a bank, or one company’s servers) holding the master copy, everyone in the network keeps their own copy. When something new is added (like a payment, or a change to a file), the network checks it’s valid. Only when the network reaches agreement (in practice a consensus among participants, rather than literally everyone) is it written into a new “block” and linked permanently to the previous one, creating a single, agreed version of the record.

Each block carries a unique digital fingerprint of the one before it, forming a chain that can’t easily be changed or faked — hence, blockchain. It’s designed to make trust a kind of obligation and shared responsibility.
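For the fellow note-takers, here is a minimal sketch of that "fingerprint of the one before it" idea in Python. It is a toy under stated assumptions: the function names (`block_hash`, `add_block`, `is_valid`) and the block layout are invented for illustration, and there is no network, consensus, or proof-of-work here, just the chaining.

```python
import hashlib
import json


def block_hash(block):
    # Serialise with sorted keys so the same block always hashes identically.
    payload = json.dumps(block, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def add_block(chain, data):
    # The first block has no predecessor, so it points at a dummy all-zero hash.
    prev_hash = block_hash(chain[-1]) if chain else "0" * 64
    chain.append({"index": len(chain), "data": data, "prev_hash": prev_hash})
    return chain


def is_valid(chain):
    # Every block must carry the fingerprint of the block before it.
    for i in range(1, len(chain)):
        if chain[i]["prev_hash"] != block_hash(chain[i - 1]):
            return False
    return True


chain = []
add_block(chain, "Alice pays Bob 5")
add_block(chain, "Bob pays Carol 2")
add_block(chain, "Carol pays Dave 1")
print(is_valid(chain))  # True

# Quietly rewrite history in the first block...
chain[0]["data"] = "Alice pays Bob 500"
print(is_valid(chain))  # False: the next block's stored fingerprint no longer matches
```

Changing an old record breaks the link to every block after it, which is why a faker would have to rewrite the whole chain, on most of the network's copies, to get away with it.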

What can it do that other solutions can’t?

Aside from outstanding version control, blockchain has some real capabilities. It can prove where something came from, who touched it, and what’s been done to it. It can automate agreements through “smart contracts,” where code releases a payment or permission when certain conditions are met (which is pretty close to letting tech make decisions). It can help manage digital identities. But it’s worth asking how much of that genuinely needs a blockchain. Most of these things can already be done with ordinary databases, legal frameworks, and a sensible permissions system, especially where the risk of tampering is low in practice, or where any tampering would do little harm or be easy to catch at the next step. Blockchain really earns its keep when there’s a genuine trust gap: when no single organisation can safely hold the keys, or when everyone needs to see the same record without owning it. And maybe those situations are becoming more common in a world where trust is hard to build.
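To make the “smart contract” idea a bit less abstract, here is a rough sketch of the logic in plain Python. It is an illustration only: `EscrowContract` and its methods are hypothetical names, and a real smart contract would be deployed on a blockchain (typically written in a contract language such as Solidity) so that no single party could edit or skip the rules.

```python
from dataclasses import dataclass


@dataclass
class EscrowContract:
    """Toy escrow: the payment is released only once delivery is confirmed."""

    buyer: str
    seller: str
    amount: int
    delivered: bool = False
    paid: bool = False

    def confirm_delivery(self, caller: str) -> None:
        # Only the buyer is allowed to confirm that the goods arrived.
        if caller != self.buyer:
            raise PermissionError("only the buyer can confirm delivery")
        self.delivered = True

    def release_payment(self) -> int:
        # The rule is enforced by code, not by anyone's discretion.
        if not self.delivered:
            raise RuntimeError("condition not met: delivery not confirmed")
        if self.paid:
            raise RuntimeError("payment already released")
        self.paid = True
        return self.amount


contract = EscrowContract(buyer="alice", seller="bob", amount=100)
contract.confirm_delivery("alice")
print(contract.release_payment())  # 100
```

The interesting part is what the code refuses to do: nobody can release the money early, and nobody can release it twice. That is the "letting tech make decisions" bit.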

And, just maybe, that means it’s good for tracking where your AI got its data. But it’s not magic: it’s slower than an ordinary database, it can be power-hungry (proof-of-work chains especially), and it can be expensive.

AI and its own trust problems

AI, meanwhile, has a different kind of trust issue. It doesn’t lie on purpose, but it doesn’t tell the truth either. Generative AI just uses probability calculations to generate things that look or sound like the thing that usually follows the other thing. That’s why it starts every email with “I hope this email finds you well”; it’s a mathematically typical way to kick off polite correspondence, regardless of your actual degree of interest in someone’s wellbeing.
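If you want to see the "thing that usually follows the other thing" principle in miniature, here is a deliberately tiny sketch. It is not how a real model works inside: large language models use neural networks over tokens, whereas this toy just counts which word follows which in three made-up example emails. The function names and the sample sentences are assumptions for illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(sentences):
    # For each word, count which words were seen immediately after it.
    follows = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows


def most_likely_next(follows, word):
    # Return the most frequently seen follower, or None for an unseen word.
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


emails = [
    "I hope this email finds you well",
    "I hope this message finds you well",
    "I hope this email reaches you safely",
]
model = train_bigrams(emails)
print(most_likely_next(model, "this"))   # email
print(most_likely_next(model, "finds"))  # you
```

Nothing in there knows or cares how you are. It has simply seen "email" after "this" more often than anything else.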

A key challenge with trusting generative AI is that we can’t always tell where it got its information, or what it was trained on, or even whether a particular output came from a model or a human. And that’s where most blockchain enthusiasts are suggesting the two tech worlds could meet. In theory, blockchain could record the provenance of AI training data, log when and how a model was updated, prove who created a piece of digital content, and keep audit trails for AI-driven decisions. There are other ways to do that, but not with the degree of certainty a blockchain offers.

All of which sounds great — and might be necessary as AI becomes embedded in government, finance or law. We’ve already acknowledged that the “human in the loop” approach won’t scale or deliver reliability, so maybe this is the answer.

The catch

On top of the trade-offs around speed, secrecy and flexibility, here’s the real problem: blockchain doesn’t fix our behaviour. It records it.

You could have a beautifully transparent ledger showing every dodgy transaction — and people will still find creative ways to dodge accountability. We’ve seen the same with AI: you can make it explainable, auditable, and even regulated, but none of that guarantees good judgement or integrity.

Practically speaking, most “blockchain for AI” projects today aren’t truly decentralised anyway. They rely on private, controlled systems: the digital equivalent of locking your open-source dream diary in a corporate safe. You’re still relying on the corporation not being Oscorp. Or LexCorp, if you’re more of a DC person.

Even in places where blockchain does work — and that apparently includes Estonia’s digital government, which uses blockchain-style ledgers to log who accesses citizens’ health or court records, as well as IBM’s Food Trust, which tracks the provenance of food from farm to supermarket — it’s more about traceability than anything else. These systems are governed, managed, and highly structured. Reliable, yes. Revolutionary? Not really.

If you must chain something

The blockchain enthusiasts are right, though. There are promising uses. Content authenticity projects like C2PA (the Coalition for Content Provenance and Authenticity, dontcha know) are embedding digital “content credentials” into images and videos to show where they came from and how they were edited — a quiet but powerful tool against deepfakes.

The BBC used C2PA credentials during the 2024 UK election to verify its footage wasn’t AI-generated. Adobe Photoshop’s Content Credentials now attach a small icon you can click to see an image’s editing history, recorded in cryptographically signed metadata that travels with the file (C2PA itself doesn’t need a blockchain, though it leans on the same tamper-evidence idea). These are potentially vital protections for consumers and creators.

In the public sector, there’s growing interest in blockchain as a way to log model changes or data-sharing agreements securely — less glamour, more governance. And that’s probably the sweet spot: using blockchain quietly, as infrastructure, not ideology.

What trust looks like in practice

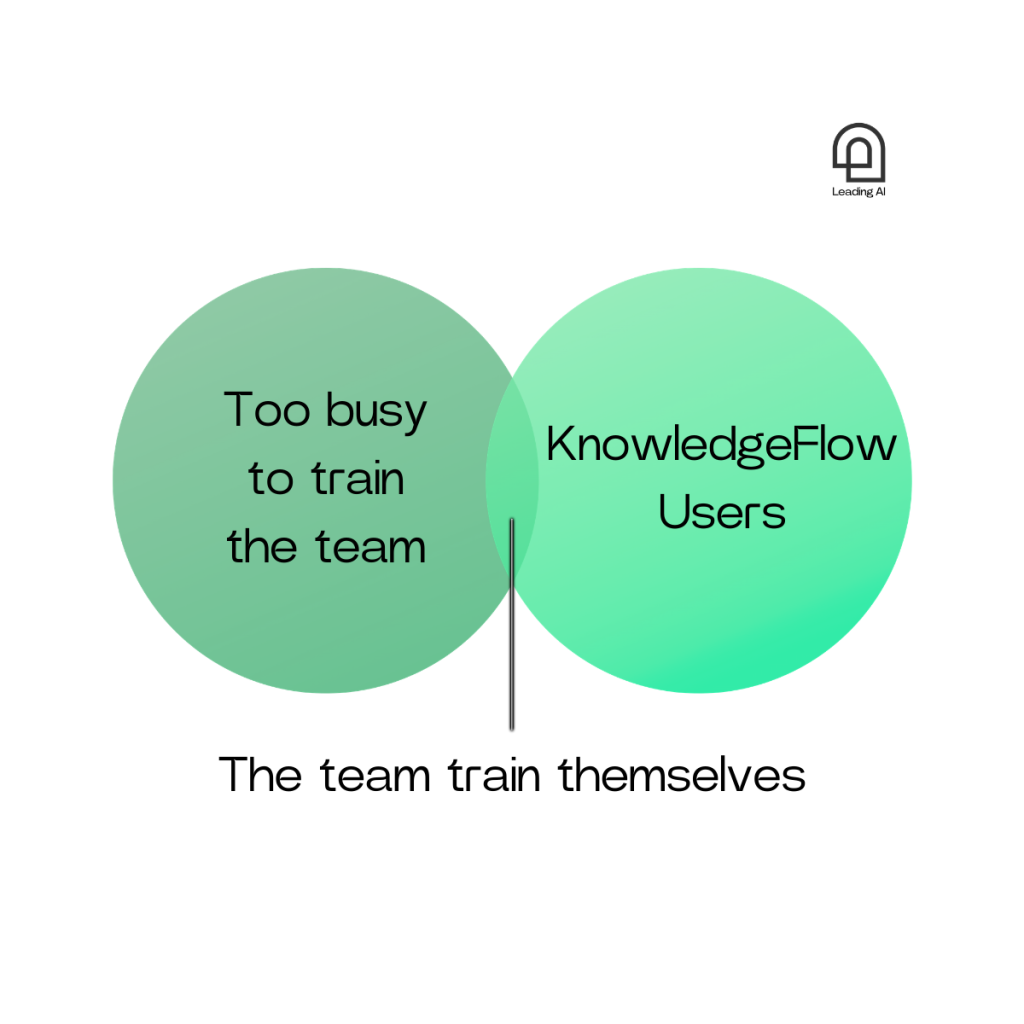

At Leading AI, we think about these questions a lot. We don’t use blockchain (yet) but we work to the same principle of traceability. We love retrieval-augmented generation (RAG) tools because they cite their sources as standard, and our systems are designed to give advice within their limits, not beyond them. That’s how we build trust in practice: not with an immutable ledger, but with visible reasoning you cannot ignore.

It’s also why we encourage people to use Perplexity rather than ChatGPT when they’re looking for information. Perplexity shows its sources, so you can check where things came from and decide for yourself how much weight to give them. That transparency makes it easier to scale your judgement to the level of risk.

We’re not managing a nation’s health records — we’re helping people unlock knowledge that’s trapped in folders. Perfect trust isn’t realistic, but good enough trust is — and that’s what most organisations need right now. Interim answers that are explainable, affordable, accountable, and useful today, while the theory keeps evolving in the background.

In the end…

Blockchain and AI both began in part as a revolt against centralised power. In practice, both have relied pretty heavily on big players, opaque systems, and a lot of human trust.

If you need a reminder that provenance doesn’t equal value, look no further than the NFT boom — the great experiment in buying digital certificates that said “you own this thing on the internet.” It proved something happened; it didn’t prove that it mattered.

Technology can record trust, but only people can earn it.