Recent rulings and guidance in the US, UK and EU point to a simple distinction: using AI to help you create is fine, but letting it do all the work? Not so much. Just as you can borrow ideas from a library but can’t photocopy a whole book and sell it, AI is a tool, not a substitute for authorship. The direction of travel is clear: transparency, human input, and secure practices matter more than ever.

You may have noticed more noise than usual lately about AI and intellectual property: who owns what, what can be protected, and whether using AI means accidentally giving away your work. Some of it feels confusing, and some of it feels very shouty, but recent legal rulings, especially in the US, UK and EU, are starting to make things clearer. Don’t be fooled into thinking it’s too complicated to engage with right now: common sense is emerging from the legal fog.

We already understand that we can go into a library, read everything, and use what we’ve learned to write something new. You can even quote bits of what you’ve read, as long as you give credit. What you can’t do is break in at night, photocopy a whole book, put your name on the front, and sell it.

That’s more or less the distinction that’s emerging in legal guidance around generative AI. And it’s the one organisations need to keep in mind as they start to build tools, generate content, and think about how to use AI responsibly.

Why this matters

One of the big legal questions is about ownership. A US court recently confirmed that purely AI-generated works, meaning those created without meaningful human input, can’t be copyrighted. The EU takes a similar view. The UK is a partial exception: its copyright law has an unusual provision for “computer-generated works”, and the government has been consulting on whether to change it. Copyright, and most other IP protections, are designed to reward human creativity, and judges tend to apply the law in that spirit. So if you’re using AI to help with your work (say, by summarising documents or suggesting drafts), your human contribution still counts. But if the AI is doing all the work for you, you shouldn’t claim ownership of the result.

The second big area of legal tension (and human angst) is the data used to train AI. There’s been a lot of debate about whether feeding copyrighted material into a large language model is allowed. In the US, a federal judge recently concluded that training a model on digitised copies of books that had been lawfully bought was “transformative” and therefore fair use. The model wasn’t simply reproducing the books, and the copies hadn’t been pirated in the first place; it was using them to learn how to generate new, different outputs.

That feels pretty reasonable, but the rules aren’t the same everywhere. The UK has no general fair use exception, only narrower “fair dealing” exceptions. Using copyright-protected material to train AI requires permission unless it fits an exception such as text and data mining for non-commercial research. The EU’s Digital Single Market Directive allows text and data mining (TDM) for scientific research, and for other purposes, including commercial ones, only where rights holders haven’t opted out.

The EU AI Act adds another layer: providers of general-purpose AI models have to put a policy in place to comply with EU copyright law (including respecting those opt-outs), make sure the data they use was lawfully accessed, and publish a summary of the content used to train their models.

Even if the rules aren’t uniform, the direction of travel is clear: transparency, accountability, and human authorship all matter. At the same time, training AI on copyright-protected material has become fairly normal, and the limits of each law are being found slowly, case by case.

Why it feels complicated, but isn’t

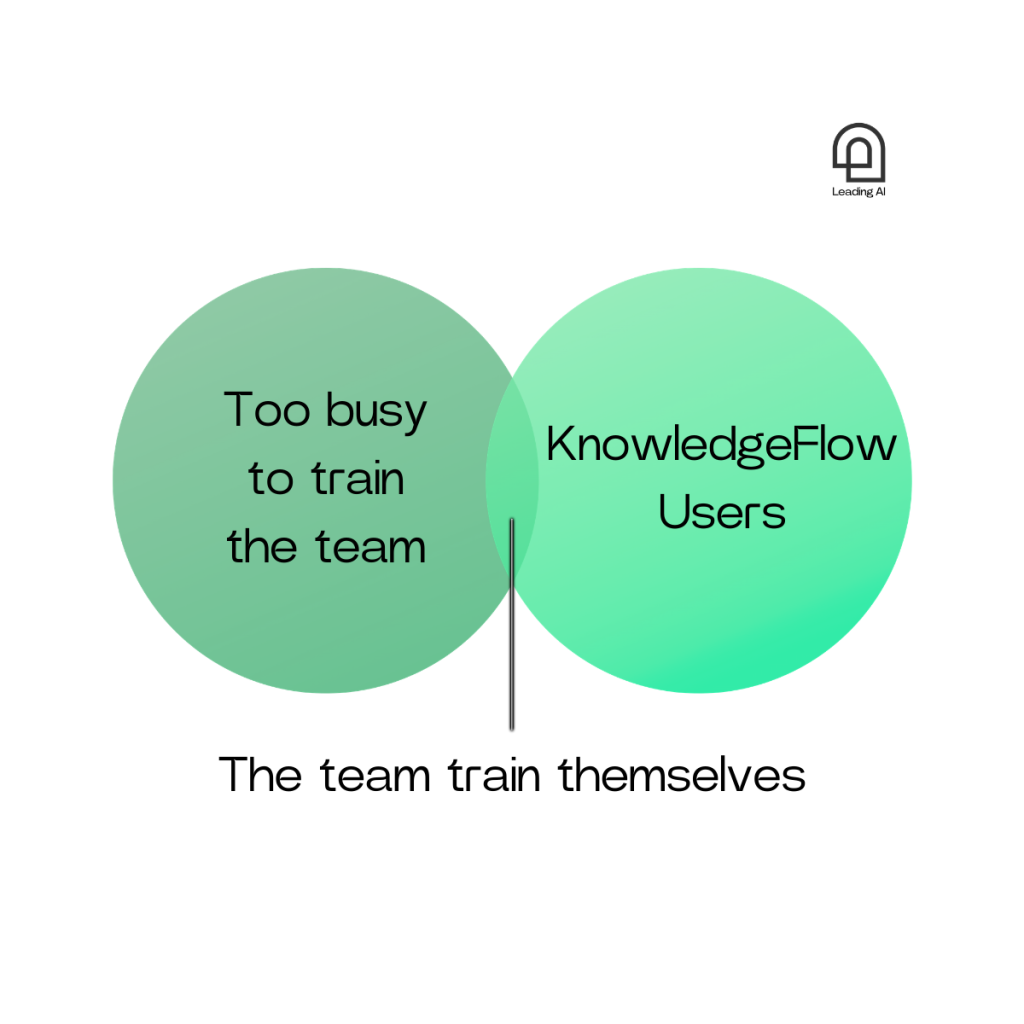

Part of the problem is that the process of using AI isn’t always intuitive. AI tools feel like magic, but legally speaking, what matters is whether the tool is helping you learn and create, or simply copying and repeating someone else’s work.

So yes, using an AI model to help summarise information, draft ideas, or write something in your organisation’s style is usually fine. That’s like using the library, during opening hours, and remembering to sign out the book and add a proper citation list to your essay. But if you feed in a third party’s content and then copy and paste what comes out — especially if it closely resembles the original — you’re probably crossing the line.

It also highlights the importance of those little tickboxes in your AI settings. When ChatGPT or Claude asks whether it can use your conversations to train future models and you leave that switched on, you’re giving away more than just words: you’re offering up patterns, phrasing, examples, and insights that might resurface in future outputs for someone else. If you’re dealing with commercially sensitive work, or just things you feel a bit sensitive about, you should absolutely turn it off.

(That’s why we like to help people by creating private AI environments for things like bid-writing and internal reports. When you run a secure pipeline, where the AI provider doesn’t retain or reuse your inputs, you keep full control. The final content is shaped in your house style, by your team, using your prompts. And because your data hasn’t been stored or used for training, it’s still yours.)

And just to complicate things further, some models draw on a different kind of source. Take DeepSeek, for example. Alongside conventional web data, it has reportedly learned in part from the outputs of other AI models, a technique known as distillation. Where those models were released under permissive licences, that may avoid some copyright pitfalls. Where they weren’t, it may be more like breaking into your house to steal the stuff you stole from the library.

What organisations should actually do

Now’s the time to recognise the direction of travel and get ahead of it. That means:

• Auditing your data: Know what’s being fed into models, where it came from, and which copyright or licence terms apply to it.

• Controlling your settings: Don’t leave “train on my data” switched on unless you’re sure that’s OK.

• Using secure tools: For anything sensitive, make sure your AI pipeline doesn’t let the model provider retain or train on your data.

• Keeping humans in the loop: If you want the result to be protected, there has to be meaningful human input.

• Staying up to date: Keep an eye on legislative proposals and implementation timelines. We’ll try and help.

• Building internal policies: Give your team clear guidance. Make sure your use of AI aligns with both your values and the law.

The bottom line

At its core, this isn’t new territory. Intellectual property law has always been about protecting human creativity and balancing that with the public interest. Generative AI doesn’t change that.

The library analogy applies. You can use knowledge to learn. You can create something new. But you need to respect where that knowledge came from. Copying isn’t learning. Ownership still means something.

We think there’s a simple hack: be honest and transparent. If you do a piece of work with AI assistance, share the prompts you used. Normalise being open about which AI tools you used, how they helped, and at what points you stepped in to check the output. You’ll generate both learning and an audit trail by default.